The Beginnings of Animations

- nathanenglish

- Feb 19, 2023


Now that Raith can talk and move his lips, it's time to start making him more lifelike and less robotic (the voice is already robotic enough) with some animations.

The Unity Animator

I'm somewhat familiar with Unity's animator state machine. I've used it before, just following along with tutorials years ago, but every time I use it I feel like I have to relearn it all over again. Anyway, knowing some of its properties (and having a bunch of discarded projects to reference) will be useful here.

I grabbed a couple of animations from Mixamo for idling and talking. These are easy enough to apply. Unity automatically creates an Avatar when you import your FBX/.blend file, and the animation FBX files just get dragged into the animation graph, which is also generated automatically.

And boom, Raith will automatically Idle as soon as you hit play. From here you can add other animations and define the conditions required to switch between them. So for example, here is where I'd also add animations for when he starts talking, and when those conditions are supposed to trigger.
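As a rough sketch of how a talking transition could be driven from a script: the parameter name "isTalking" and the idea that Raith's voice plays through an AudioSource are both assumptions for illustration, not something taken from the actual project. The Animator would need a bool parameter with that name and transitions between the idle and talking states that check it.

```csharp
using UnityEngine;

// Minimal sketch: mirror "is the voice playing?" into an Animator bool.
// "isTalking" is a hypothetical parameter name; it must exist on the
// Animator Controller and be used as a transition condition there.
public class TalkingAnimation : MonoBehaviour
{
    [SerializeField] private Animator animator;
    [SerializeField] private AudioSource voice; // assumed source of the speech audio

    // Hashing the name once avoids a string lookup every frame.
    private static readonly int IsTalking = Animator.StringToHash("isTalking");

    private void Update()
    {
        if (animator == null) return;

        bool talking = voice != null && voice.isPlaying;
        animator.SetBool(IsTalking, talking);
    }
}
```

With something like this attached, the graph decides how to blend between idle and talking, and the script only reports whether speech is currently playing.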

Unity allows you to add different layers so that your graphs don't get all clogged up. I've created different layers for eye blinking (which will happen randomly regardless of whatever other animations might be playing) and for his face emotions.

BlendShape animation

I added a couple more Blend Shapes to the mesh for making facial expressions. I created a blink, which can be adjusted anywhere from 0% to 100% to account for things like squinting. I also added eyebrow shapes, one for an angry face and one for a sad face. The eyebrows are controlled individually so that I can do partial expressions (like an inquisitive eyebrow raise using only one eyebrow).

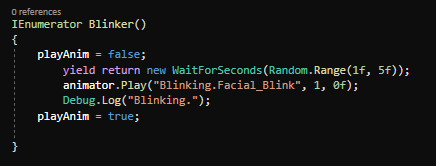
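For reference, a Blend Shape can also be set straight from a script, which is handy for checking values like a half-closed squint. This is only a sketch: the Blend Shape name "Blink" is a placeholder, and the real name depends on what the shape key was called in Blender.

```csharp
using UnityEngine;

// Minimal sketch: drive one Blend Shape by name from the Inspector.
// "Blink" is a hypothetical shape name used for illustration.
public class BlendShapeSlider : MonoBehaviour
{
    [SerializeField] private SkinnedMeshRenderer face;
    [SerializeField] private string blendShapeName = "Blink";

    // Unity Blend Shape weights run from 0 to 100 by default.
    [Range(0f, 100f)] public float weight;

    private int index = -1;

    private void Start()
    {
        if (face == null || face.sharedMesh == null) return;

        // GetBlendShapeIndex returns -1 when no shape has that name.
        index = face.sharedMesh.GetBlendShapeIndex(blendShapeName);
        if (index < 0)
        {
            Debug.LogWarning($"Blend Shape '{blendShapeName}' not found on {face.name}");
        }
    }

    // LateUpdate is used so that an animation clip touching the same
    // shape does not overwrite the value set here in the same frame.
    private void LateUpdate()
    {
        if (index >= 0)
        {
            face.SetBlendShapeWeight(index, weight);
        }
    }
}
```

Dragging the slider to around 50 would give the partial blink described above, and one component per eyebrow shape allows each brow to be tested on its own.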

The blinking animation is just one state, controlled via Coroutine to occur at random intervals of 1-5 seconds.
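A coroutine along these lines would produce that kind of random blinking. The layer name and state name here are made up for the example, and it assumes the blink clip does not loop, so each call plays a single blink.

```csharp
using System.Collections;
using UnityEngine;

// Minimal sketch: replay a blink state at random intervals.
// "Blink" as both layer and state name is an assumption for illustration.
public class RandomBlink : MonoBehaviour
{
    [SerializeField] private Animator animator;
    [SerializeField] private string layerName = "Blink";
    [SerializeField] private string stateName = "Blink";
    [SerializeField] private float minDelay = 1f; // seconds
    [SerializeField] private float maxDelay = 5f; // seconds

    private int layerIndex = -1;
    private Coroutine loop;

    private void Awake()
    {
        // GetLayerIndex returns -1 if the layer does not exist.
        if (animator != null)
        {
            layerIndex = animator.GetLayerIndex(layerName);
        }
    }

    private void OnEnable()
    {
        loop = StartCoroutine(BlinkLoop());
    }

    private void OnDisable()
    {
        if (loop != null)
        {
            StopCoroutine(loop);
            loop = null;
        }
    }

    private IEnumerator BlinkLoop()
    {
        while (true)
        {
            // Wait somewhere between minDelay and maxDelay seconds.
            yield return new WaitForSeconds(Random.Range(minDelay, maxDelay));

            if (animator != null && layerIndex >= 0)
            {
                // Restart the blink state from the beginning of the clip.
                animator.Play(stateName, layerIndex, 0f);
            }
        }
    }
}
```

Because the blink lives on its own layer, restarting it this way leaves whatever the base layer is playing untouched.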

The facial expressions are currently controlled by booleans so I could test that they work. The tree looks like this: the animation begins on an empty state where nothing happens, but if the condition "isAngry" or "isSad" is met, it transitions to the respective facial animation. Once the condition is false again, it goes back to the default empty state.
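One simple way to flip those booleans while testing is to expose them in the Inspector and copy them into the Animator each frame. The parameter names "isAngry" and "isSad" come from the graph described above; the component itself is just a hypothetical test harness.

```csharp
using UnityEngine;

// Minimal sketch: tick the checkboxes in the Inspector during Play mode
// to push the expression layer into its angry or sad state.
public class ExpressionTester : MonoBehaviour
{
    [SerializeField] private Animator animator;

    public bool isAngry;
    public bool isSad;

    private static readonly int IsAngryHash = Animator.StringToHash("isAngry");
    private static readonly int IsSadHash = Animator.StringToHash("isSad");

    private void Update()
    {
        if (animator == null) return;

        // The transitions in the graph read these parameters; unticking
        // a box lets the graph fall back to the empty default state.
        animator.SetBool(IsAngryHash, isAngry);
        animator.SetBool(IsSadHash, isSad);
    }
}
```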

All together, we get these!

Eye Tracking

I wanted to make sure his eyes actually followed the camera so that when he moves around or gestures as he talks, his eyes are still looking at the user. I had to go back into Blender and add bones to his eyeballs.

After that, the eye bones can be moved via script and told to follow the main camera in Unity. It's good practice to put anything that adjusts animated bones in LateUpdate() rather than Update(). In the default update mode, LateUpdate gets called after every Update and after the Animator has posed the skeleton for the frame, so your bone rotations won't get overwritten by whatever animation is playing. (I had to apply an extra 90-degree rotation on the X axis because of a weird rotation problem, but it works now, so whatever.)
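Here is a minimal sketch of what such an eye-tracking script could look like. The field names are invented for the example, and the 90-degree X correction is exposed as a setting because the right offset depends on how the bones were oriented when they were exported.

```csharp
using UnityEngine;

// Minimal sketch: aim two eye bones at the main camera every frame.
// Field names and the default correction are assumptions for illustration.
public class EyeLookAt : MonoBehaviour
{
    [SerializeField] private Transform leftEye;
    [SerializeField] private Transform rightEye;

    // Extra rotation applied after aiming, to compensate for bone axes
    // that do not point along Unity's forward (Z) direction.
    [SerializeField] private Vector3 axisCorrection = new Vector3(90f, 0f, 0f);

    private Transform target;

    private void Start()
    {
        // Camera.main is null when no camera is tagged "MainCamera".
        Camera cam = Camera.main;
        target = cam != null ? cam.transform : null;
    }

    // Runs after the Animator has posed the bones for this frame.
    private void LateUpdate()
    {
        if (target == null) return;

        Aim(leftEye);
        Aim(rightEye);
    }

    private void Aim(Transform eye)
    {
        if (eye == null) return;

        Vector3 toTarget = target.position - eye.position;
        if (toTarget.sqrMagnitude < 1e-6f) return; // avoid a zero-length direction

        Quaternion look = Quaternion.LookRotation(toTarget);
        eye.rotation = look * Quaternion.Euler(axisCorrection);
    }
}
```

If the eyes end up pointing at the ceiling or the floor, the correction vector is the first thing to tweak.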

Now, no matter how much I move the camera around, or how much he moves his head around, his eyes will look at you! The texture needs to be fixed to include his entire iris now.
