Visemes and Lipsync Setup

- nathanenglish

- Feb 14, 2023


Updated: Feb 19, 2023

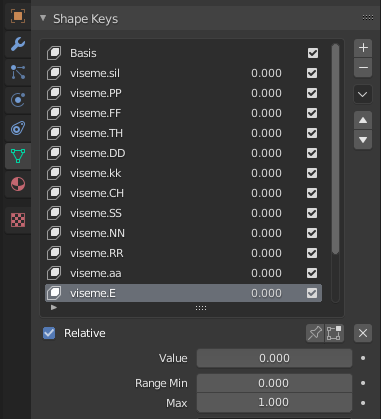

To get my model talking, I have to make all the visemes (the mouth shapes we make while talking). Oculus Lipsync uses 15 visemes, and every one of those shapes has to be recreated on my model in Blender. Luckily, it didn't take too long, maybe a solid hour.
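Since all 15 shapes have to exist on the model, a quick check can catch a missing one before exporting. Here's a minimal Python sketch (Python being Blender's scripting language) that lists the 15 Oculus Lipsync visemes and reports which ones have no matching shape key. The `viseme_` naming prefix and the `missing_visemes` helper are hypothetical, so swap in whatever names your model actually uses.

```python
# The 15 visemes used by Oculus Lipsync, in the order the SDK reports them.
OCULUS_VISEMES = [
    "sil", "PP", "FF", "TH", "DD", "kk", "CH", "SS",
    "nn", "RR", "aa", "E", "ih", "oh", "ou",
]


def missing_visemes(shape_key_names, prefix="viseme_"):
    """Return the visemes that have no matching shape key.

    Assumes (hypothetically) that each shape key is named prefix + viseme,
    e.g. "viseme_aa". Matching is case-insensitive, so "VISEME_AA" also counts.
    Extra keys such as "Basis" are simply ignored.
    """
    available = {name.lower() for name in shape_key_names}
    return [v for v in OCULUS_VISEMES if (prefix + v).lower() not in available]
```

Inside Blender, the names could be gathered with `[kb.name for kb in bpy.context.active_object.data.shape_keys.key_blocks]` and passed straight to `missing_visemes`.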

Here's a GIF of a couple of the mouth shapes.

Next, the FBX gets imported into Unity, where the Oculus Lipsync components are added. These scripts analyze the audio playing through an Audio Source and turn it into viseme weights, which animate the mouth using the shapes I made.
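Conceptually, each frame the lipsync analysis produces a weight per viseme, and those weights drive the model's blend shapes. The Python sketch below illustrates only that mapping step; it is not the Oculus component's code. The function name, the viseme-to-blend-shape mapping, and the exponential smoothing are assumptions for illustration. Unity blend shape weights are typically set in a 0 to 100 range, so the 0 to 1 viseme weights are scaled by 100.

```python
from typing import Dict, List, Optional

NUM_VISEMES = 15  # Oculus Lipsync reports one weight per viseme


def visemes_to_blend_weights(
    viseme_weights: List[float],
    viseme_to_blend: Dict[int, int],
    previous: Optional[Dict[int, float]] = None,
    smoothing: float = 0.0,
) -> Dict[int, float]:
    """Convert one frame of viseme weights (0..1) into blend shape weights (0..100).

    viseme_to_blend maps a viseme index (0..14) to a blend shape index on the
    mesh, assumed one-to-one. smoothing is in [0, 1): 0 means no smoothing,
    values closer to 1 make the mouth respond more slowly (hypothetical
    exponential smoothing, not necessarily what the component does).
    """
    if len(viseme_weights) != NUM_VISEMES:
        raise ValueError(f"expected {NUM_VISEMES} viseme weights, got {len(viseme_weights)}")
    if not 0.0 <= smoothing < 1.0:
        raise ValueError("smoothing must be in [0, 1)")
    previous = previous or {}
    result: Dict[int, float] = {}
    for viseme_index, blend_index in viseme_to_blend.items():
        # Clamp to 0..1, then scale to Unity's usual 0..100 blend shape range.
        target = max(0.0, min(1.0, viseme_weights[viseme_index])) * 100.0
        old = previous.get(blend_index, 0.0)
        # Blend the previous frame's weight toward the new target.
        result[blend_index] = smoothing * old + (1.0 - smoothing) * target
    return result
```

A higher `smoothing` value makes the mouth lag slightly behind the audio, but it cuts down on jitter between frames.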

Here's what that component looks like. It maps each viseme to one of the blend shapes that came in with the imported model. It even has laughter detection, if you made a shape for laughter.
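Laughter works a little differently from the visemes: instead of one weight per mouth shape, there's a single laughter score that should only kick in once it's high enough. Here's a minimal sketch of that idea, assuming a simple threshold-and-rescale rule. The function name, threshold, and multiplier values are hypothetical and are not the component's actual implementation or defaults.

```python
def laughter_blend_weight(laughter_score: float, threshold: float = 0.5,
                          multiplier: float = 1.5) -> float:
    """Map a raw laughter score (0..1) to a blend shape weight (0..100).

    Scores below the threshold are ignored. The remainder is rescaled to
    0..1, boosted by the multiplier, and clamped so it never exceeds 1.
    """
    if not 0.0 < threshold < 1.0:
        raise ValueError("threshold must be in (0, 1)")
    if laughter_score < threshold:
        return 0.0
    normalized = (laughter_score - threshold) / (1.0 - threshold)
    return min(normalized * multiplier, 1.0) * 100.0
```

The threshold keeps ordinary speech from triggering the laugh shape, while the multiplier lets a moderate laugh still reach a full expression.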

Paired with the text-to-speech, it comes together like this (video below). The next step is to add some animations so he doesn't look so lifeless and robotic. He takes some time to think and translate, so I think a little animation showing that he's thinking will be a nice addition.
